Virtualization technology and cloud computing have brought a paradigm shift in the way we utilize, deploy and manage computer resources. They allow fast deployment of multiple operating systems as virtual machines on physical hosts, which can either be discarded after use or checkpointed for later redeployment. At the European Organization for Nuclear Research (CERN), we have been using virtualization to quickly set up virtual machines with pre-configured software for our developers, so that they can rapidly test and deploy a new version of a software patch for a given application. This article reports on both the techniques we used to set up a private cloud on commodity hardware and the optimizations we applied to remove deployment-specific performance bottlenecks.

The key motivation for opting for a private cloud has been the way we use the infrastructure. Our user community includes developers, testers and application deployers who need to provision machines very quickly on demand to test, patch and validate a given configuration for CERN’s control system applications. Virtualized infrastructure, together with cloud management software, enabled our users to request new machines on demand and release them once their testing was complete.

Implementation

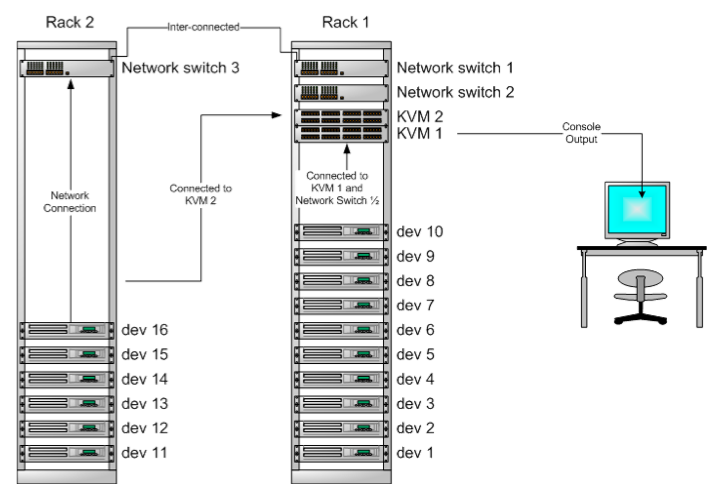

The hardware used for our experiments consists of HP ProLiant 380 G4 machines, each with 8 GB of memory and 500 GB of disk, connected by Gigabit Ethernet. Five servers ran the VMware ESXi bare-metal hypervisor to provide virtualization capabilities. We also evaluated the Xen hypervisor with the Eucalyptus cloud platform, but because we needed to run Windows VMs, we opted for VMware ESXi. OpenNebula Professional (Pro) was used as the cloud front end to manage the ESXi nodes and to provide users with an access portal.

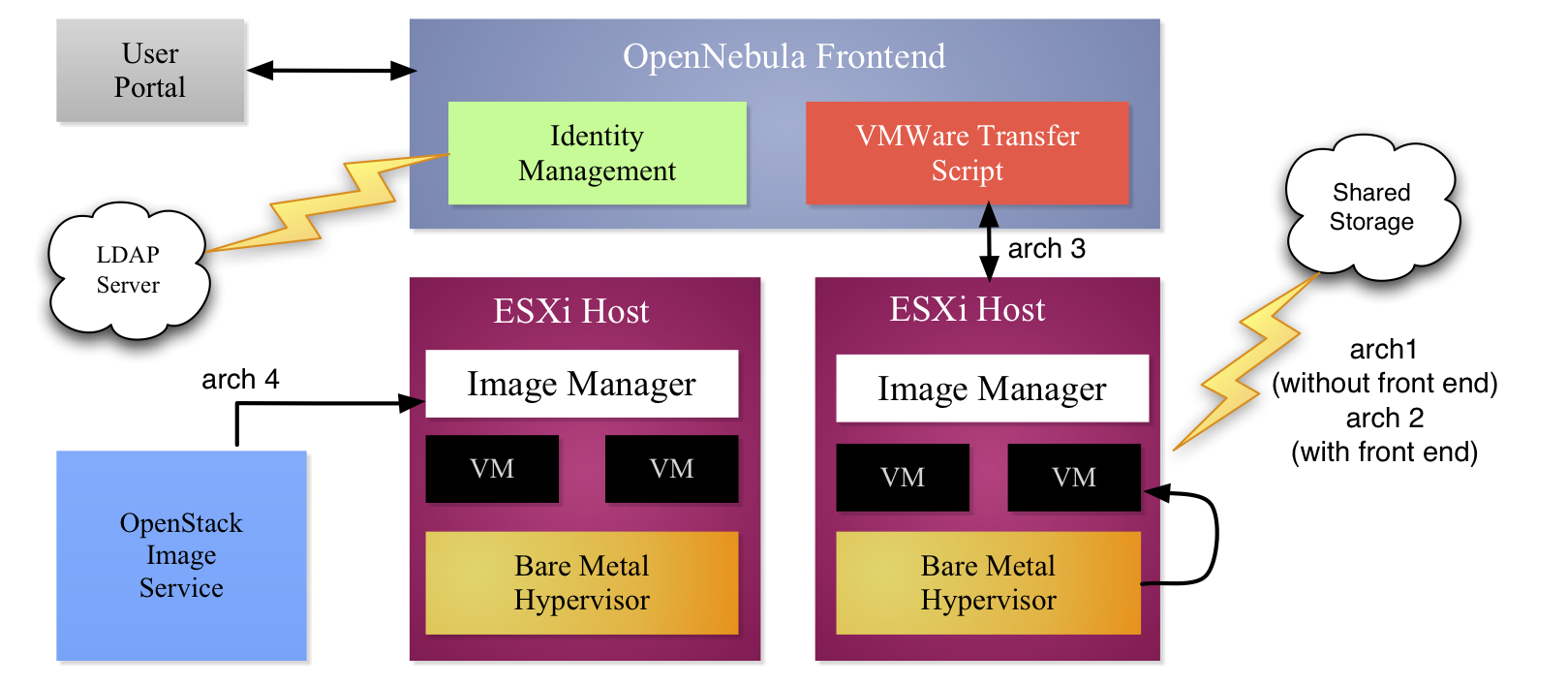

A number of deployment configurations were tested and their performance was benchmarked. The configurations we tested are the following:

- Central storage with front end (arch_1): the shared storage and the OpenNebula Pro front end run on two different servers. All VM images reside on the shared storage at all times.

- Central storage without front end (arch_2): the shared storage, exported over the Network File System (NFS), runs on the same server as the OpenNebula front end. All VM images reside on the shared storage at all times.

- Distributed storage remote copy (arch_3): VM images are deployed to each ESXi node at deployment time, copied over the Secure Shell (SSH) protocol by the front end’s VMware transfer driver.

- Distributed storage local copy (arch_4): VM images are managed by an image manager service that pre-emptively downloads them to all ESXi nodes. The front end runs on a separate server and sets up VMs using the locally cached images.

Each deployment configuration has its advantages and disadvantages. arch_1 and arch_2 use a shared storage model in which all VMs are set up on central storage. When a VM request is sent to the front end, it clones an existing template image and places the clone on the central storage. It then communicates the memory and networking configuration to the ESXi server, pointing it to the location of the VM image. The advantage of these two configurations is that they simplify the management of template images, as all virtual machine data is stored on the central server. They have two disadvantages. First, a disk failure on the central storage causes all VMs to lose data. Second, system performance can be seriously degraded if the shared storage is not high-performance or lacks high-bandwidth connectivity to the ESXi nodes. Central storage therefore becomes the performance bottleneck for these approaches.
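To make the clone-then-point workflow of arch_1 and arch_2 concrete, here is a minimal Python sketch. It assumes the shared datastore is a mounted directory (for example an NFS export) and that a template is a single image file; the function name, directory layout and returned configuration dictionary are hypothetical and do not reproduce OpenNebula's or ESXi's actual formats.

```python
import shutil
from pathlib import Path


def deploy_on_shared_storage(template: Path, datastore: Path, vm_id: int,
                             memory_mb: int, network: str) -> dict:
    """Clone a template onto the shared datastore and describe the new VM.

    Hypothetical helper: the layout (datastore/vm-<id>/<image>) and the
    returned dict are illustrative, not a real OpenNebula/ESXi format.
    """
    vm_dir = datastore / f"vm-{vm_id}"
    vm_dir.mkdir(parents=True, exist_ok=False)  # one directory per VM
    disk = vm_dir / template.name
    # The full copy is written on the central storage server, so
    # concurrent requests all compete for the same disks.
    shutil.copyfile(template, disk)
    # Only this small configuration goes to the ESXi host; the disk stays
    # on shared storage and is read over the network while the VM runs.
    return {"vm_id": vm_id, "memory_mb": memory_mb,
            "network": network, "disk_path": str(disk)}
```

Because the clone lives on the central server and the running VM keeps reading from it over the network, every additional VM adds load to the same disks and the same link.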

arch_3 and arch_4 try to overcome this shortcoming by using all available disk space on the ESXi servers. The challenge here is how to clone and maintain VM images at run time and how to refresh them when they are updated. arch_3 resolves both challenges by copying the VM image to the target node at request time (using the VMware transfer script add-on from OpenNebula Pro), and removing the image from the node when the VM is shut down. For each new request, a new copy of the template image is sent over the network to the target node. However, network bandwidth and the ability of the ESXi nodes to write copies of the template images become the bottleneck. arch_4 is our optimization strategy: we implement an external image manager service that maintains and synchronizes a local copy of each template image on each ESXi node, using OpenStack’s image and registry service, Glance. This approach removes both the central-storage bottleneck and the per-request network copy.
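The core idea of arch_4, keeping an up-to-date local copy of every template on each node so that nothing large crosses the network at request time, can be sketched as a checksum-based synchronization. This is a hypothetical illustration assuming templates are plain files in a repository directory and each node has a local cache directory; it does not use Glance's API, and the function names are our own.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def sync_templates(repository: Path, node_cache: Path) -> list[str]:
    """Hypothetical cache sync: make node_cache mirror every template.

    A template is copied only if it is missing locally or its checksum
    differs; returns the names of the templates that were (re)copied.
    """
    node_cache.mkdir(parents=True, exist_ok=True)
    copied = []
    for template in sorted(repository.iterdir()):
        if not template.is_file():
            continue
        cached = node_cache / template.name
        if cached.exists() and sha256_of(cached) == sha256_of(template):
            continue  # cache is current: no transfer needed
        shutil.copyfile(template, cached)
        copied.append(template.name)
    return copied
```

Run periodically on each node, or when a template is updated, such a job pays the transfer cost once per template version rather than once per VM request, as arch_3 does.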

Finally, we empirically tested all architectures to answer the following questions:

- How quickly can the system deploy a given number of virtual machines?

- Which storage architecture (shared or distributed) will deliver optimal performance?

- What is the average wait time for deploying a virtual machine?
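For the third question, the wait time of a VM can be taken as the interval from request to boot. Below is a minimal sketch of averaging it over repeated runs, assuming timestamps in seconds recorded as (request_time, boot_time) pairs per VM; the data layout and the average-of-run-means approach are assumptions, not the exact procedure used in the study.

```python
from statistics import mean


def average_wait_seconds(runs: list[list[tuple[float, float]]]) -> float:
    """Average request-to-boot wait over repeated runs of one scenario.

    Each run is a list of (request_time, boot_time) pairs in seconds.
    The mean wait is computed per run, then averaged across runs.
    """
    per_run = [mean(boot - request for request, boot in run) for run in runs]
    return mean(per_run)
```

When every run deploys the same number of VMs (10 here), averaging the run means gives the same result as pooling all VMs together.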

Results

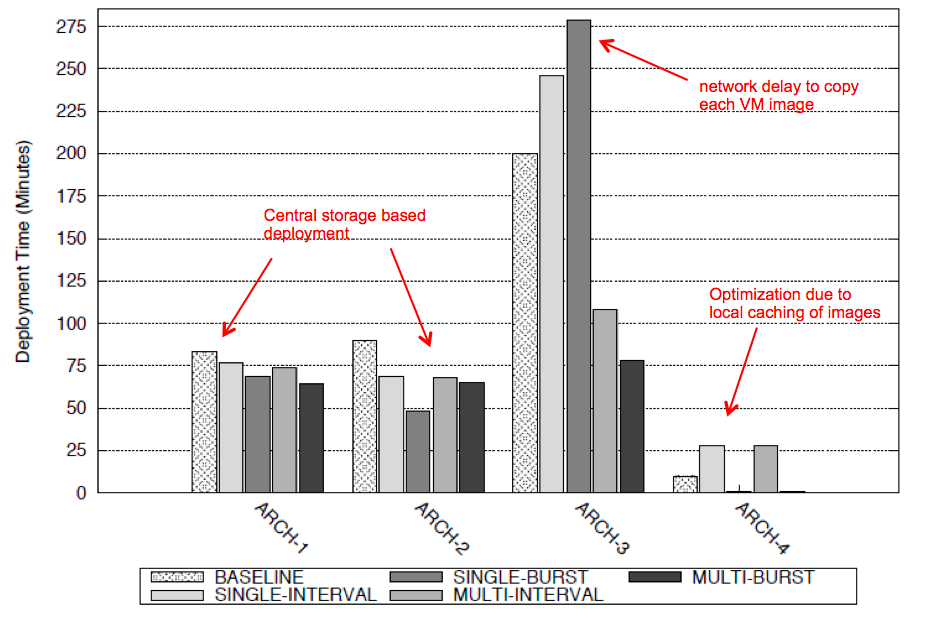

All four architectures were evaluated under four deployment scenarios. Each scenario was run three times, and the averaged results are presented in this section. Any computing infrastructure shared by multiple users goes through cycles of demand, and during peaks fewer resources are available to deliver optimal service quality, so the scenarios vary how requests are concentrated in time and across servers.

We were particularly interested in the following deployment scenarios, in each of which 10 virtual machines (10 GB each) were deployed:

- Single Burst (SB): All virtual machines were requested in a single burst, restricted to one server only. This is the most resource-intensive scenario.

- Multi Burst (MB): All virtual machines were requested in a single burst, spread across multiple servers.

- Single Interval (SI): The virtual machines were requested at 3-minute intervals, all on one server.

- Multi Interval (MI): The virtual machines were requested at 3-minute intervals, spread across multiple servers. This is the least resource-intensive scenario.
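The four scenarios differ along two axes: burst versus interval submission, and one target server versus several. A small generator makes that explicit. It is a hypothetical sketch: the host names are placeholders, and spreading multi-server requests round-robin is an assumption, since the text only says that multiple servers were used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    vm_index: int
    submit_at_s: int  # seconds after the scenario starts
    host: str


def schedule(scenario: str, hosts: list[str], n_vms: int = 10,
             interval_s: int = 180) -> list[Request]:
    """Build the request schedule for one of the SB/MB/SI/MI scenarios.

    Burst scenarios submit every request at t=0; interval scenarios space
    them interval_s apart. Single scenarios pin every VM to hosts[0];
    multi scenarios spread them round-robin (an assumption) over all hosts.
    """
    if scenario not in {"SB", "MB", "SI", "MI"}:
        raise ValueError(f"unknown scenario: {scenario}")
    burst = scenario in {"SB", "MB"}
    single = scenario in {"SB", "SI"}
    requests = []
    for i in range(n_vms):
        submit_at = 0 if burst else i * interval_s
        host = hosts[0] if single else hosts[i % len(hosts)]
        requests.append(Request(i, submit_at, host))
    return requests
```

For example, `schedule("MI", hosts)` with five placeholder ESXi hosts submits the last of the 10 requests 1620 seconds (27 minutes) after the first.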

The results show that by integrating locally cached images and managing them with OpenStack’s image service, we were able to deploy our VMs in less than 5 minutes. The remote copy technique works well for small images, but as image size and the number of VM requests grow, it adds load on the network and increases the time needed to deploy a VM.
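A back-of-the-envelope bound shows why remote copy struggles as image size and request count grow. The sketch below assumes every copy leaves the front end over a single Gigabit Ethernet link at full line rate, with decimal units and no SSH, protocol or disk overhead; these are simplifying assumptions, so real transfers will be slower.

```python
def min_copy_time_s(n_vms: int, image_gb: float, link_gbit_s: float) -> float:
    """Lower bound on the time to push n full image copies over one link.

    Assumes decimal units (1 GB = 8 Gbit), a single shared link at full
    line rate, and no protocol, SSH or disk overhead.
    """
    total_gbit = n_vms * image_gb * 8
    return total_gbit / link_gbit_s
```

For a burst of 10 requests with 10 GB images, `min_copy_time_s(10, 10, 1)` gives 800.0 seconds, more than 13 minutes on the wire before any VM can boot. With locally cached images, this per-request transfer disappears.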

Conclusion

The results also show that distributed storage using locally cached images, managed through a centralized cloud platform (OpenNebula Pro in our study), is a practical option for setting up local clouds in which users can create virtual machines on demand within 15 minutes (from request to machine boot-up) while keeping the cost of the underlying infrastructure low.
