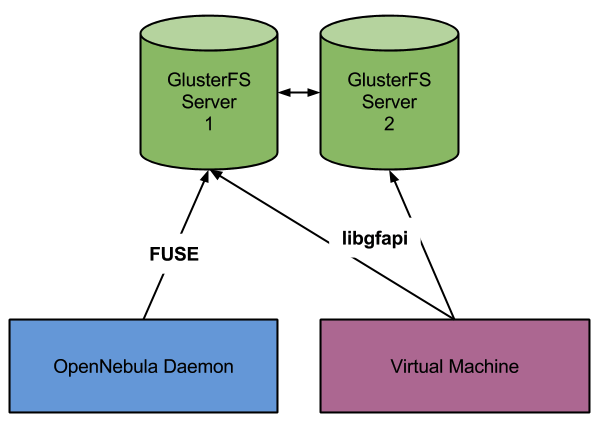

GlusterFS is a distributed filesystem with replication and storage distribution features that come in really handy for virtualization. The storage can be mounted as a filesystem using NFS or the GlusterFS FUSE adapter and used like any other shared filesystem. This is very convenient because it works the same way as other filesystems, but it carries the overhead of NFS or FUSE.

The good news is that for some time now qemu and libvirt have had native support for GlusterFS. This makes it possible for VMs running from images stored in Gluster to talk directly to its servers, making the IO much faster.
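As a rough illustration of what "talking directly" means, QEMU tools address an image on a Gluster volume with a `gluster://` URI instead of a path on a mounted filesystem. The helper below is only a sketch: the function name and the server, volume and image names in the example are made up for illustration.

```shell
# Build the gluster:// URI that QEMU tools accept for an image on a volume.
# Usage: gluster_uri <server> <port> <volume> <path inside volume>
gluster_uri() {
    printf 'gluster://%s:%s/%s/%s\n' "$1" "$2" "$3" "$4"
}

# Example (hypothetical names; needs a QEMU build with GlusterFS support):
#   qemu-img info "$(gluster_uri gluster1 24007 one disk.img)"
```

Port 24007 is the one used for GLUSTER_HOST later in this post.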

The integration was made to be as similar as possible to the shared drivers (in fact it uses the shared tm and fs datastore drivers). Datastore management operations like image registration or cloning still use the FUSE-mounted filesystem, so OpenNebula administrators will feel at home with it.

This feature is headed for 4.6 and is already in the git repository and the documentation. Basically the configuration to use this integration is as follows.

- Configure the server to allow non-root user access to Gluster. Add this line to ‘/etc/glusterfs/glusterd.vol’:

option rpc-auth-allow-insecure on

And execute this command:

# gluster volume set <volume> server.allow-insecure on

- Set the ownership of the files to ‘oneadmin’:

# gluster volume set <volume> storage.owner-uid <oneadmin uid>

# gluster volume set <volume> storage.owner-gid <oneadmin gid>
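To avoid typing the uid and gid by hand, the volume settings can be generated from the account itself. This is a minimal sketch, assuming it runs on a machine where the `oneadmin` account exists with the same uid/gid as on the hosts. The function name is hypothetical, and it only prints the commands so they can be reviewed before being run as root on a Gluster server.

```shell
# Print (do not run) the volume settings from the steps above,
# with the uid/gid looked up for the given account.
# Usage: gluster_owner_cmds <volume> [user]   (user defaults to oneadmin)
gluster_owner_cmds() {
    volume=$1
    user=${2:-oneadmin}
    uid=$(id -u "$user") || return 1
    gid=$(id -g "$user") || return 1
    echo "gluster volume set $volume server.allow-insecure on"
    echo "gluster volume set $volume storage.owner-uid $uid"
    echo "gluster volume set $volume storage.owner-gid $gid"
}
```

Once the output looks right it can be piped to a shell, for example `gluster_owner_cmds myvolume | sh`, where `myvolume` stands in for your volume name.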

- Mount GlusterFS using FUSE at some point in your frontend:

# mkdir -p /gluster

# chown oneadmin:oneadmin /gluster

# mount -t glusterfs <server>:/<volume> /gluster
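A quick way to confirm the mount worked is to look for it in the kernel's mount table, where GlusterFS FUSE mounts are normally listed with the type `fuse.glusterfs`. The sketch below assumes a Linux frontend with `/proc/mounts`. The function name is hypothetical, and the optional second argument exists only so the check can be pointed at another file.

```shell
# Succeed if <mount point> is listed as a GlusterFS FUSE mount.
# Usage: is_gluster_mount <mount point> [mounts file]
is_gluster_mount() {
    awk -v mp="$1" '
        $2 == mp && $3 == "fuse.glusterfs" { found = 1 }
        END { exit !found }
    ' "${2:-/proc/mounts}"
}

# Example:
#   is_gluster_mount /gluster && echo "GlusterFS is mounted on /gluster"
```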

- Create shared datastores for images and system and add these extra parameters to the images datastore:

DISK_TYPE = GLUSTER

GLUSTER_HOST = <gluster_server>:24007

GLUSTER_VOLUME = <volume>

CLONE_TARGET="SYSTEM"

LN_TARGET="NONE"
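Put together, the images datastore template could look like the output of the sketch below. The datastore name and the function name are hypothetical. `DS_MAD = fs` and `TM_MAD = shared` are taken from the earlier remark that the integration reuses the fs datastore and shared tm drivers, so check them against the documentation for your version.

```shell
# Print an images datastore template with the Gluster attributes listed above.
# Usage: gluster_ds_template <gluster server> <volume> [datastore name]
gluster_ds_template() {
    cat <<EOF
NAME = "${3:-gluster_images}"
DS_MAD = fs
TM_MAD = shared
DISK_TYPE = GLUSTER
GLUSTER_HOST = $1:24007
GLUSTER_VOLUME = $2
CLONE_TARGET = "SYSTEM"
LN_TARGET = "NONE"
EOF
}
```

The template can then be saved and registered as oneadmin, for example with `gluster_ds_template gluster1 myvolume > gluster_ds.conf` followed by `onedatastore create gluster_ds.conf`.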

- Link the system and images datastore directories to the GlusterFS mount point:

$ ln -s /gluster /var/lib/one/datastores/100

$ ln -s /gluster /var/lib/one/datastores/101

Now when you start a new VM you can check that the deployment file points to the server configured in the datastore. Another nice feature is that storage will fall back to a secondary server in case one of them crashes. The information about replicas is gathered automatically, so there is no need to add more than one host (and doing so is currently not supported in libvirt).
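One way to do that check is to search the deployment file for disks that use the gluster protocol. This is a sketch under assumptions: the function name is hypothetical, and the file location in the example (`/var/lib/one/vms/<vm id>/deployment.0` on the frontend) should be verified on your installation.

```shell
# Show the source and host lines of disks that use the gluster protocol
# in a libvirt deployment file.
# Usage: gluster_disk_sources <deployment file>
gluster_disk_sources() {
    grep -E "protocol=['\"]gluster['\"]|<host " "$1"
}

# Example (assumed path on the frontend):
#   gluster_disk_sources /var/lib/one/vms/42/deployment.0
```

The host name and port printed should match the GLUSTER_HOST value set in the images datastore.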

If you are interested in this feature, now is a good time to download and compile the master branch to test it. There is still some time until a release candidate of 4.6 comes out, but we’d love to get feedback as soon as possible so we can fix any problems it may have.

We want to thank the Netways people for helping us with this integration and with testing the qemu/gluster interface, and John Mark from the Gluster team for his technical assistance.

mount -t gluster :/ /gluster

should be:

mount -t glusterfs :/ /gluster

Messed me up.

My bad. I’ve just changed the post. Thanks for telling us.

One more thing. It’s “gluster volume set storage.owner-gid ”

Not

“gluster volume set storage.owner-gid=”

Subsequently it’s the same for the uid as well.

That messed me up as well.