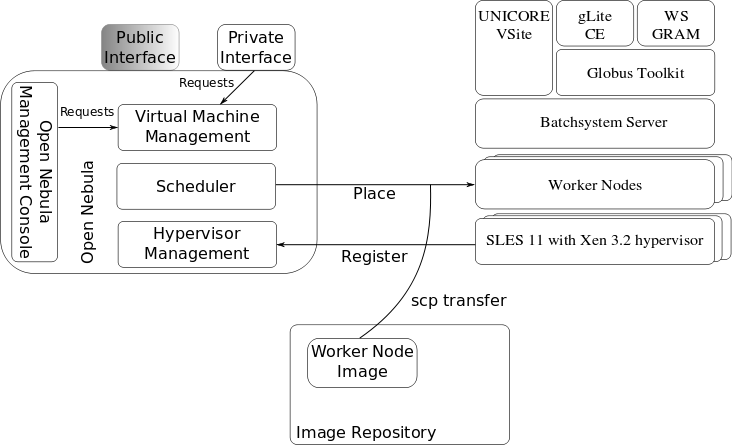

The D-Grid Resource Center Ruhr (DGRZR) was established in 2008 at Dortmund University of Technology as part of the German Grid initiative D-Grid. In contrast to other resources, DGRZR used virtualization technologies from the start and still runs all Grid middleware, batch system and management services in virtual machines. In 2010, DGRZR was extended by the installation of OpenNebula as its Compute Cloud middleware to manage our virtual machines as a private cloud.

Physical resources:

DGRZR consists of 256 HP blade servers, each with eight CPU cores (2048 cores in total) and 16 gigabytes of RAM. The disk space per server is about 150 gigabytes, 50% of which is reserved for virtual machine images. The operating system on the physical servers is SUSE Linux Enterprise Server (SLES) 10 Service Pack 3, which will be replaced by SLES 11 in the near future. We provide our D-Grid users with roughly 100 terabytes of central storage, mainly for home directories, experiment software and the dCache Grid Storage Element. In 2009, the mass storage was upgraded by adding 25 terabytes of HP Scalable File Share 3.1 (a Lustre-like file system), which is currently being migrated to version 3.2. Typically, 250 of the 256 blade servers run virtual worker nodes. The remaining servers run virtual machines for the Grid middleware services (gLite, Globus Toolkit and UNICORE), the batch system server, and other management services.
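The figures above can be cross-checked with a few lines of arithmetic. The following is only a back-of-the-envelope sketch: the constants are taken from the text, while the variable and function names are made up for illustration.

```python
# Capacity figures quoted in the text; all names are illustrative.
SERVERS = 256
CORES_PER_SERVER = 8
RAM_GB_PER_SERVER = 16
DISK_GB_PER_SERVER = 150      # "about 150 gigabytes" per server
IMAGE_SHARE = 0.5             # 50% of local disk reserved for VM images
WORKER_NODE_SERVERS = 250     # servers that typically run worker nodes


def capacity_summary():
    """Derive aggregate capacity numbers from the per-server figures."""
    return {
        "total_cores": SERVERS * CORES_PER_SERVER,
        "total_ram_gb": SERVERS * RAM_GB_PER_SERVER,
        "image_space_gb_per_server": DISK_GB_PER_SERVER * IMAGE_SHARE,
        "worker_node_cores": WORKER_NODE_SERVERS * CORES_PER_SERVER,
        "service_servers": SERVERS - WORKER_NODE_SERVERS,
    }


if __name__ == "__main__":
    for name, value in capacity_summary().items():
        print(f"{name}: {value}")
```

Under these numbers, about 75 gigabytes per server are available for virtual machine images, and six servers remain for middleware and management services.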

Networking:

The network configuration of the resource center is static and assumes a fixed mapping from the MAC address of a virtual machine to its public IP address. A separate virtual LAN exists for each available node type (worker nodes, Grid middleware services and management services), and DNS names for the possible leases have been set up in advance in the central DNS servers of the university.
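A static lease table of this kind can be sketched as follows. This is a minimal illustration, not the DGRZR configuration: the address range (a documentation-only network), the `example.org` host names, and the convention of embedding the IPv4 address in the last four bytes of the MAC address are all assumptions, since the text does not say how MAC addresses are chosen.

```python
import ipaddress


def mac_for_ip(ip, prefix="02:00"):
    """Encode an IPv4 address into the last four bytes of a MAC address.

    The two-byte prefix and this encoding scheme are assumptions made
    for illustration only.
    """
    octets = ipaddress.IPv4Address(ip).packed
    return prefix + ":" + ":".join(f"{b:02x}" for b in octets)


def build_leases(network, first_host, count, name_prefix, domain):
    """Pre-compute a fixed MAC -> (IP, DNS name) table for one node type."""
    net = ipaddress.IPv4Network(network)
    leases = {}
    for i in range(count):
        ip = net.network_address + first_host + i
        if ip not in net:
            raise ValueError(f"{ip} is outside {net}")
        name = f"{name_prefix}{i + 1:03d}.{domain}"
        leases[mac_for_ip(str(ip))] = (str(ip), name)
    return leases


if __name__ == "__main__":
    # Hypothetical worker-node VLAN with three leases.
    for mac, (ip, name) in build_leases(
        "192.0.2.0/24", 10, 3, "wn", "example.org"
    ).items():
        print(mac, ip, name)
```

Because the table is computed once and never changes, the DNS entries can be registered ahead of time, which matches the static setup described above.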

Image repository and distribution:

The repository consists of images for the worker nodes, based on Scientific Linux 4.8 and 5.4, as well as images for the UNICORE and Globus Toolkit services. We will soon start creating images for the gLite services.

The master images that are cloned to the physical servers are located on an NFS server and are kept up to date manually. The initial creation of such images (including installation and configuration of Grid services) is currently also done manually, but will be replaced by automated workflows in the near future. The images are distributed to the physical servers on demand using the OpenNebula SSH transfer mechanism. Currently we have no need to pre-stage virtual machine images on the physical servers, but we may add this later using scp-wave.
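Conceptually, an on-demand, SSH-based distribution step boils down to copying the master image to the target server when a virtual machine is deployed there. The sketch below only builds the corresponding `scp` command line and does not run it. It is not OpenNebula's actual transfer script, and the host names and paths are hypothetical.

```python
import posixpath


def build_clone_command(source_host, image_path, target_host, target_dir):
    """Return the scp argument list that copies a master image to a server.

    The command is only constructed, not executed, so the sketch can be
    inspected without touching any machine.
    """
    filename = posixpath.basename(image_path)
    source = f"{source_host}:{image_path}"
    destination = f"{target_host}:{posixpath.join(target_dir, filename)}"
    return ["scp", source, destination]


if __name__ == "__main__":
    # Hypothetical image repository host, image and blade server.
    command = build_clone_command(
        "images.example.org",
        "/srv/images/worker-node.img",
        "blade001.example.org",
        "/var/lib/vm-images",
    )
    print(" ".join(command))
```

A pre-staging step would issue the same kind of copy ahead of time for many servers instead of at deployment time.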

The migration of virtual machines has been tested in conjunction with SFS 3.1, but production usage has been postponed until the completion of the file system upgrade.

OpenNebula:

The version currently in use is an OpenNebula 1.4 Git snapshot from March 2010. Due to some problems with Xen on SLES 10 (e.g. “tap:aio” not really working), we made modifications to the snapshot. In addition, we set up the OpenNebula Management Console and use it as a graphical user interface.

The SQLite3 database back-end performs well for the limited number of virtual machines we are running, but with the upgrade to OpenNebula 1.6 we will migrate to a MySQL back-end to prepare for an extension of our cloud to other clusters. Using Haizea as a lease manager seems out of scope at the moment. However, with the upcoming integration of this resource as a D-Grid IaaS resource, scheduler features such as advance reservations will become mandatory.

Stefan Freitag (Robotics Research Institute, TU Dortmund)

Florian Feldhaus (ITMC, TU Dortmund)
