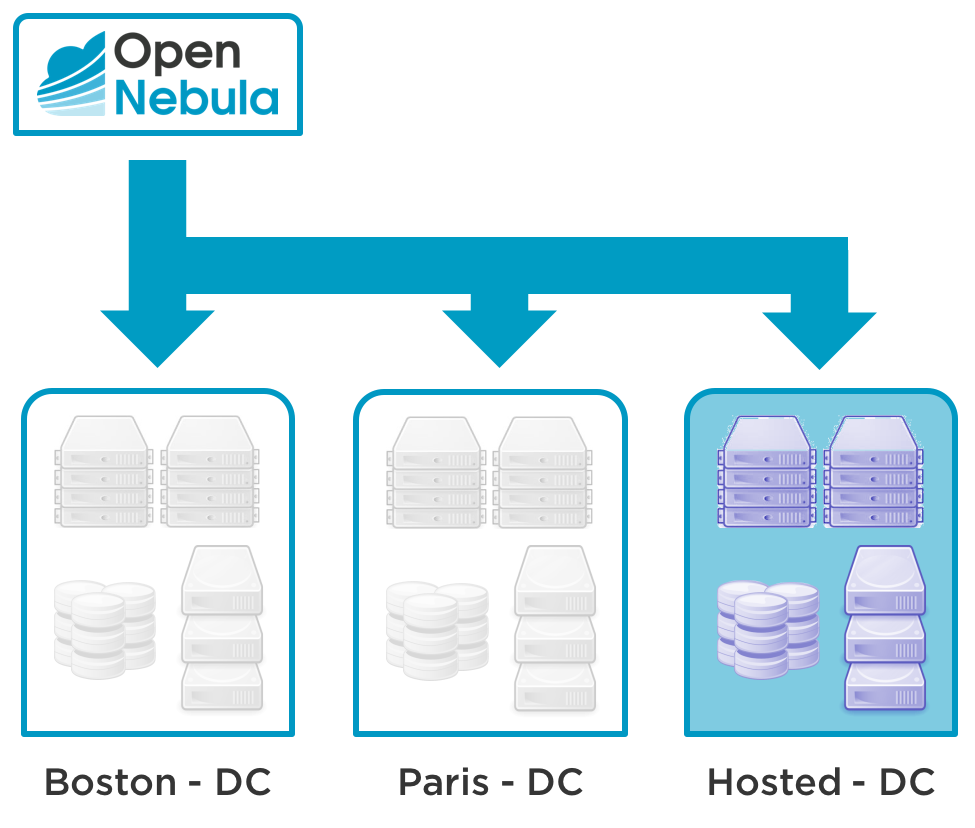

Over the last few months we have been working on a new internal project to enable disaggregated private clouds. Our aim is to provide the tools and methods needed to grow your private cloud infrastructure with physical resources, initially individual hosts but eventually complete clusters, running on remote bare-metal cloud providers.

Two of the use cases that this new disaggregated cloud approach will support are:

- Distributed Cloud Computing. This approach will allow the transition from centralized clouds to distributed edge-like cloud environments. You will be able to grow your private cloud with resources at edge data center locations to meet the latency and bandwidth needs of your workloads.

- Hybrid Cloud Computing. This approach works as an alternative to the existing hybrid cloud drivers. When there is a peak in demand and you need extra computing power, you will be able to dynamically grow your underlying physical infrastructure. Compared with the use of hybrid drivers, this approach can be more efficient because it involves a single management layer. It is also simpler because you can continue using your existing OpenNebula images and templates. Moreover, you always keep complete control over the infrastructure and avoid vendor lock-in.

There are several benefits of this approach over the traditional, more decoupled hybrid solution that involves using the provider's cloud API. One of them stands out among the rest: the ability to move offline workloads between your local and rented resources. The disaggregated private cloud support will include a tool to automatically move images and VM Templates from local clusters to remotely provisioned ones.

In this post, we show a preview of a prototype version of “oneprovision”, a tool to deploy new remote hosts from a bare-metal cloud provider and add them to your private cloud instance. In particular, we are working with Packet to build this first prototype.

Automatic Provision of Remote Resources

A simple tool, oneprovision, will be provided to deal with all aspects of the physical host lifecycle. The tool should be installed on the OpenNebula frontend, as it shares parts with the frontend components. It’s a standalone tool intended to be run locally on the frontend; it’s not a service (for now). Its usage is similar to that of the other OpenNebula CLI tools.

Let’s look at a demo of how to deploy an independent KVM host on Packet, the bare-metal provider.

Listing

Listing provisions works the same way as listing any other OpenNebula hosts.

    $ onehost list
      ID NAME            CLUSTER   RVM      ALLOCATED_CPU      ALLOCATED_MEM STAT
       0 localhost       default     0       0 / 400 (0%)     0K / 7.5G (0%) on

    $ oneprovision list
      ID NAME            CLUSTER   RVM PROVIDER STAT

Based on the listings above, we don’t have any provisions and our resources are limited to localhost.

Provision

Adding a new host is as simple as running a command. However, a provision needs too many parameters to specify comfortably on the command line. That’s why most of the details are provided in a separate provision description file, a YAML-formatted document.

Example (packet_kvm.yaml):

    ---

    # Provision and configuration defaults
    provision:
      driver: "packet"
      packet_token: "********************************"
      packet_project: "************************************"
      facility: "ams1"
      plan: "baremetal_0"
      billing_cycle: "hourly"

    configuration:
      opennebula_node_kvm_param_nested: true

    ##########

    # List of devices to deploy with
    # provision and configuration overrides:
    devices:
      - provision:
          hostname: "kvm-host001.priv.ams1"
          os: "centos_7"
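The comments in the description file suggest that each entry under devices is layered on top of the top-level provision and configuration defaults. The Python sketch below illustrates that idea with the values from packet_kvm.yaml (credentials omitted). The function name effective_settings and the per-section shallow merge are assumptions made for illustration; this is not taken from the oneprovision implementation, which may merge differently.

```python
import copy

# Values copied from packet_kvm.yaml (credentials left out on purpose).
defaults = {
    "provision": {
        "driver": "packet",
        "facility": "ams1",
        "plan": "baremetal_0",
        "billing_cycle": "hourly",
    },
    "configuration": {"opennebula_node_kvm_param_nested": True},
}

devices = [
    {"provision": {"hostname": "kvm-host001.priv.ams1", "os": "centos_7"}},
]


def effective_settings(defaults, device):
    """Return a copy of the defaults with the device's own keys layered on top.

    Hypothetical helper: assumes a shallow merge per section
    ("provision", "configuration"); the real tool may behave differently.
    """
    merged = copy.deepcopy(defaults)  # never mutate the shared defaults
    for section, overrides in device.items():
        merged.setdefault(section, {}).update(overrides)
    return merged


host = effective_settings(defaults, devices[0])
print(host["provision"]["hostname"])  # device-specific value
print(host["provision"]["plan"])      # inherited from the defaults
```

Under this reading, a second device would only need to list the keys that differ from the defaults, such as its own hostname.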

Now we use this description file with the oneprovision tool to allocate a new host on Packet, seamlessly configure it to work as a KVM hypervisor, and finally add it to OpenNebula.

    $ oneprovision create -v kvm -i kvm packet_kvm.yaml
    ID: 63

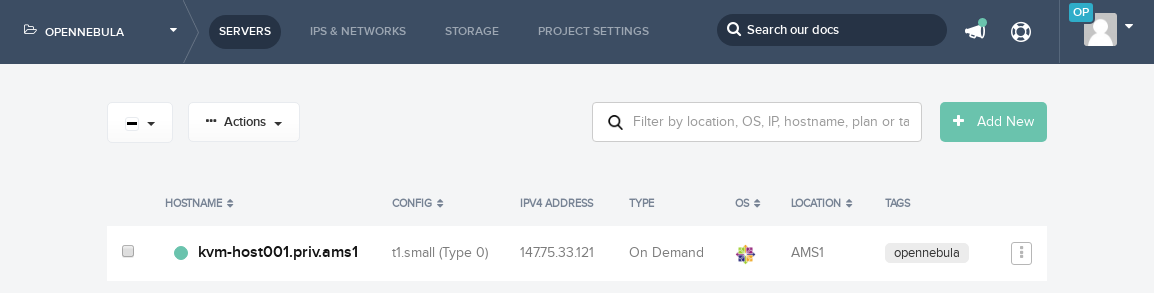

Now, the listings show our new provision.

    $ oneprovision list
      ID NAME            CLUSTER   RVM PROVIDER STAT
      63 147.75.33.121   default     0 packet   on

    $ onehost list
      ID NAME            CLUSTER   RVM      ALLOCATED_CPU      ALLOCATED_MEM STAT
       0 localhost       default     0       0 / 400 (0%)     0K / 7.5G (0%) on
      63 147.75.33.121   default     0       0 / 400 (0%)     0K / 7.8G (0%) on
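If you want to check the state of a provision from a script rather than by eye, the listing is simple enough to parse. The following Python sketch is only an illustration: the helper name parse_provision_list is made up here, and it assumes the six whitespace-separated columns shown in this post with no spaces inside any value. The prototype's output format may still change.

```python
def parse_provision_list(text):
    """Parse `oneprovision list` output into a list of dicts keyed by column name.

    Assumes whitespace-separated columns and values without embedded spaces,
    as in the listing shown in this post.
    """
    lines = [line for line in text.splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split()
    return [dict(zip(header, line.split())) for line in lines[1:]]


# Sample text mirroring the listing from this post.
sample = """\
  ID NAME            CLUSTER   RVM PROVIDER STAT
  63 147.75.33.121   default     0 packet   on
"""

provisions = parse_provision_list(sample)
states = {p["ID"]: p["STAT"] for p in provisions}
print(states)  # {'63': 'on'}
```

On a frontend, the text could instead come from running the command itself, for example with subprocess.run(["oneprovision", "list"], capture_output=True, text=True).stdout. Note that this approach would not work unchanged for onehost list, whose CPU and memory columns contain spaces.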

You can also check your Packet dashboard to see the new host.

Host Management

The tool provides a few physical host management commands. Although you can still use your favorite UI or provider-specific CLI tools to achieve the same goal, oneprovision also manages the corresponding host objects in OpenNebula.

For example, if you power off the physical machine via oneprovision, the related OpenNebula host is also switched to the offline state, so that OpenNebula doesn’t waste time monitoring an unreachable host.

You will be able to reset the host.

    $ oneprovision reset 63

Or completely power it off and resume it any time later.

    $ oneprovision poweroff 63

    $ oneprovision list
      ID NAME            CLUSTER   RVM PROVIDER STAT
      63 147.75.33.121   default     0 packet   off

    $ oneprovision resume 63

    $ oneprovision list
      ID NAME            CLUSTER   RVM PROVIDER STAT
      63 147.75.33.121   default     0 packet   on

Terminate

When the provision isn’t needed anymore, it can be deleted. The physical host is released at the bare-metal provider, and the corresponding host is removed from OpenNebula.

    $ oneprovision delete 63

    $ oneprovision list
      ID NAME            CLUSTER   RVM PROVIDER STAT

    $ onehost list
      ID NAME            CLUSTER   RVM      ALLOCATED_CPU      ALLOCATED_MEM STAT
       0 localhost       default     0       0 / 400 (0%)     0K / 7.5G (0%) on

Stay tuned for the release of this first feature of our new cloud disaggregation project, and as always we will be looking forward to your feedback!
